In this article, we will explore the scenario of providing customers with the ability to instantly ask questions about their own data and documents stored within your enterprise or bank.

Many applications and platforms offer functionality for organisations to upload static documents and interact with chatbots to answer questions about these documents. These interactions typically take the form of Q&As, FAQs, or responses based on internal organizational documents, such as those found on intranet sites. However, what if we want our generative AI and large language models (LLMs) to answer questions based on specific documents and data belonging to individual customers for our enterprise or bank?

Let's consider a practical scenario in which a customer is finalizing a mortgage agreement with a bank. The bank's mortgage advisor uploads the mortgage contract to the customer's account within the bank's digital banking platform.

The customer can then review the document via the bank's website or mobile app to understand all the terms and conditions before signing the contract. However, to enhance this process, what if the customer could ask questions about their specific mortgage contract using the bank's chatbot, instead of reaching out to a human mortgage advisor?

To add more complexity to the scenario, let’s assume that besides the mortgage contract, other customer-specific data stored in the bank’s database also influences the mortgage decision. For instance, personal data, income details, Know Your Customer (KYC) information, and Prominent Influential Person (PIP) status may all play a role in determining the mortgage offer, and thus they should be included in any answers to the customer's questions on the mortgage offer.

With this solution, the bank’s chatbot can provide the customer with answers to their questions by leveraging both the uploaded mortgage contract and the relevant customer data stored in the bank’s systems. This would enable the customer to inquire about their entire mortgage offer and receive contextually accurate responses derived from both the provisional mortgage contract and their personal data.

Let's design this solution together, focusing on how we can seamlessly integrate generative AI and LLMs to create an intelligent, personalised customer experience within the banking sector.

The User Journey:

- The bank's mortgage advisor uploads the mortgage contract to the customer's account within the bank's digital banking platform.

- The mortgage advisor enters the KYC, PIP, and income data provided by the customer into the bank's internal system.

- The uploaded mortgage contract is now available on the bank's digital platform, along with the customer's personal and KYC data stored in the bank's database.

- The customer needs to fully understand the terms of the mortgage offer before signing it. To achieve this, they can ask the bank's chatbot various questions about the offer.

- The chatbot provides answers based on a combination of the customer's uploaded mortgage contract and their personal data stored in the bank's database.

This streamlined process ensures that customers have instant access to critical information about their mortgage offers. It enhances their understanding and boosts their confidence in making informed decisions without needing direct human intervention.

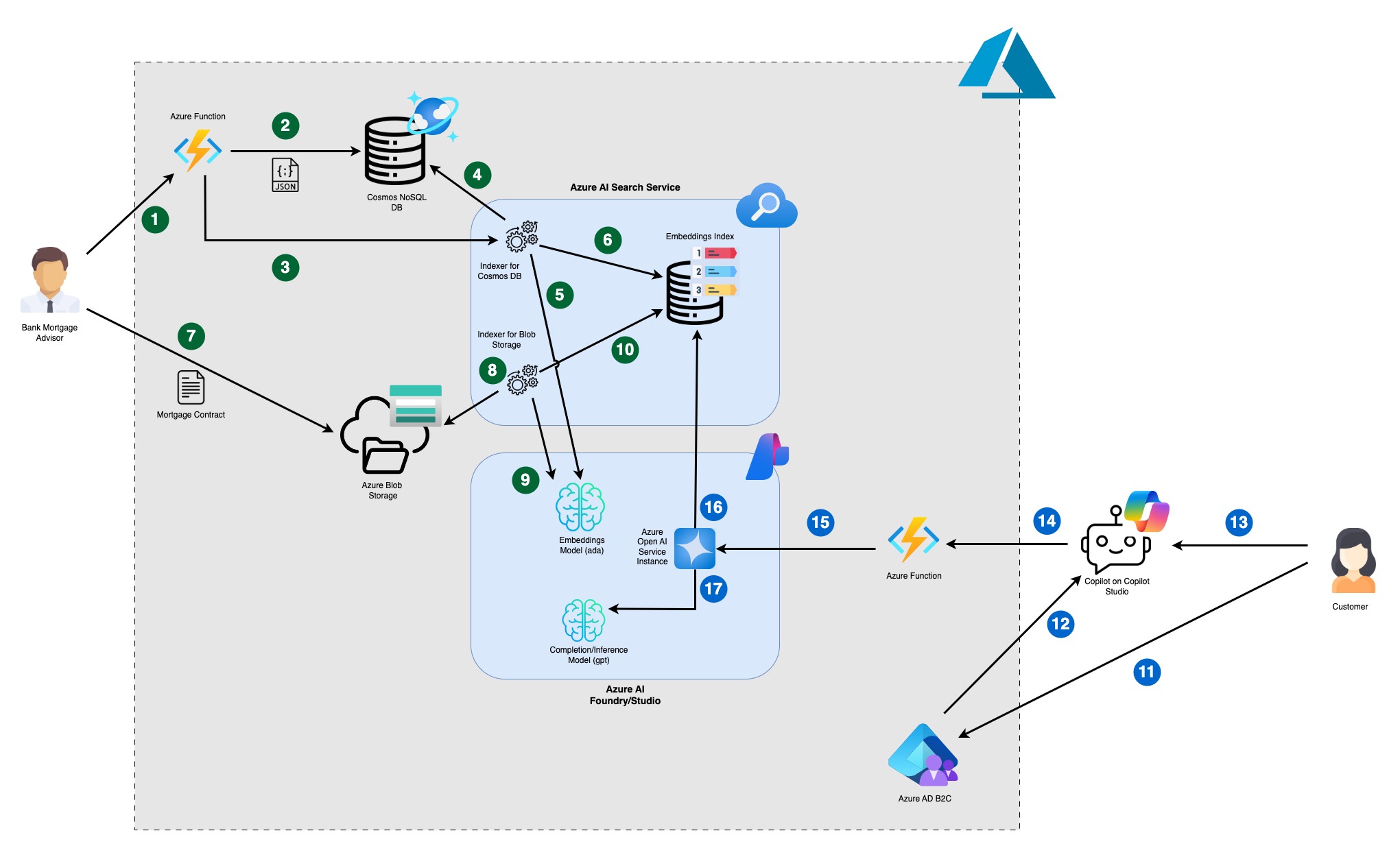

Solution Architecture

Mortgage Advisor Data Flow

The data flow begins with the bank's mortgage advisor, who uploads the provisional mortgage contract and submits the customer data via the bank's internal application. These steps are marked with green circles in the diagram.

- The application calls a backend Azure Function to insert the customer-specific data, such as KYC and PIP data, into the database.

- The data is stored in Azure Cosmos DB for NoSQL as JSON documents.

- Once the data is inserted, the Azure Function triggers the configured Cosmos DB indexer on Azure AI Search service to ensure the data is immediately available for customer queries.

- The indexer retrieves the newly added documents from the database.

- The indexer then segments the content of the new documents into chunks and uses OpenAI's text-embedding-ada-002 model (deployed on Azure AI Foundry) to generate embeddings for each chunk, following a standard RAG (Retrieval-Augmented Generation) flow.

- The indexer stores the embeddings for the chunked JSON documents in the combined index. The indexer and its configuration are part of the Azure AI Search service.
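
The chunking step in this flow can be pictured with a minimal sketch. It assumes fixed-size character windows with a small overlap; the `chunk_text` helper, the sizes, and the sample record are hypothetical. In the actual solution the splitting is handled by the indexer configuration in Azure AI Search, and each chunk is then sent to the embedding model (not shown here).

```python
import json


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# Hypothetical customer record, as it might be stored in the database.
record = {"id": "1", "user_id": "cust-001", "kyc_status": "verified", "annual_income": 85000}
chunks = chunk_text(json.dumps(record), size=40, overlap=10)
for chunk in chunks:
    print(repr(chunk))
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighbouring chunk, which tends to help retrieval quality.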

Next, the mortgage advisor uploads the mortgage contract for customer review:

- Using the bank's internal application, the PDF document is uploaded directly to Azure Blob Storage.

- The blob storage indexer is configured to run automatically whenever new files are added to the blob storage.

- The indexer processes the PDF document by chunking its content and using the same ada model to generate embeddings for each chunk.

- The indexer then adds the embeddings for the chunked PDF document to the same index. As a result, the index now contains embeddings from both data sources: files in storage and database records. This overall and combined index is ready to be queried by customers.

Customer Data Flow

Once the data is prepared, the customer can interact with the system using Copilot to ask questions about their mortgage contract and related data. The steps are marked with blue circles in the diagram.

- The customer opens the chat widget and authenticates. Authentication is configured through Microsoft Copilot Studio to use the bank's instance of Azure Active Directory B2C.

- After successful authentication, the user ID becomes available in the Copilot flow.

- The customer submits a question through the chat interface.

- Copilot sends the user ID along with the customer’s question to the Azure Function.

- The Azure Function calls the Azure OpenAI Service using the Azure OpenAI SDK.

- Following a typical RAG flow, the Azure OpenAI Service converts the question/prompt into embeddings and queries the index to retrieve the closest matching records.

- The content of the matching records is then passed as context to a completion model, such as GPT-4, to generate a detailed answer. The response is returned to the customer through the chat interface.
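
The retrieval part of this flow can be sketched in a few lines. This is a toy illustration under stated assumptions, not the Azure implementation: the in-memory `INDEX`, the three-dimensional vectors, the contract sentences, and the helper names are all made up, and the question vector stands in for the output of the embedding model. It shows the two things that matter: only the logged-in customer's records are considered, and the text of the closest records becomes the context for the completion model.

```python
import math

# Toy in-memory "index": each record holds a chunk, its owner, and a made-up embedding.
INDEX = [
    {"user_id": "cust-001", "chunk": "Interest rate is fixed at 4.1% for 5 years.", "vector": [0.9, 0.1, 0.0]},
    {"user_id": "cust-001", "chunk": "Early repayment fee is 1% of the balance.", "vector": [0.1, 0.9, 0.0]},
    {"user_id": "cust-002", "chunk": "Interest rate is variable, currently 5.3%.", "vector": [0.9, 0.1, 0.1]},
]


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(question_vector, user_id, top_k=1):
    """Return the top_k closest chunks that belong to the given user."""
    candidates = [r for r in INDEX if r["user_id"] == user_id]
    ranked = sorted(candidates, key=lambda r: cosine(question_vector, r["vector"]), reverse=True)
    return [r["chunk"] for r in ranked[:top_k]]


def build_prompt(question, chunks):
    """Assemble the text that would be sent to the completion model."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# The vector below stands in for the embedding of the customer's question.
prompt = build_prompt("What is my interest rate?", retrieve([1.0, 0.0, 0.0], "cust-001"))
print(prompt)
```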

Key Highlights

Data Filtering

One of the key challenges is ensuring that the chatbot only responds with data and files belonging to the logged-in customer, especially since the database and file storage contain information for many different customers.

For the data in the database, this challenge can be addressed by adding metadata, such as the customer ID, when indexing the data. The indexer can be configured to use a field in the JSON document from the database as a new field in the index record. This configuration is done through the indexer’s JSON field mappings.
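
As an illustration, an indexer definition with such a mapping might look like the sketch below, written as a Python dictionary that serialises to the JSON sent to Azure AI Search. The indexer, data source, and index names, as well as the `customerId` source field, are placeholders chosen for this example and are not taken from the actual solution.

```python
import json

# Hypothetical names: the indexer, data source, index, and the "customerId"
# source field are placeholders for illustration only.
cosmos_indexer = {
    "name": "customer-data-indexer",
    "dataSourceName": "cosmos-customer-data",
    "targetIndexName": "customer-knowledge-index",
    "fieldMappings": [
        # Copy the customer identifier from the JSON document into the index.
        {"sourceFieldName": "customerId", "targetFieldName": "user_id"},
    ],
}

print(json.dumps(cosmos_indexer, indent=2))
```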

A new field, "user_id", should be added to the index configuration and marked as filterable. This is essential because a filter is applied when querying the index, so that responses are restricted to the relevant customer’s data.
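
A minimal sketch of the relevant part of the index schema is shown below. The `id` and `content` field names are assumptions made for this example, and the vector field with its search profile is left out to keep the focus on the filterable attribute.

```python
import json

# Minimal field list for the combined index. "id" and "content" are
# hypothetical names; the vector field and its search profile are omitted.
index_fields = [
    {"name": "id", "type": "Edm.String", "key": True},
    {"name": "content", "type": "Edm.String", "searchable": True},
    {"name": "user_id", "type": "Edm.String", "filterable": True, "searchable": False},
]


def filterable_fields(fields):
    """Return the names of the fields that can be used in a filter expression."""
    return [f["name"] for f in fields if f.get("filterable")]


print(json.dumps(index_fields, indent=2))
print(filterable_fields(index_fields))
```

Keeping `user_id` non-searchable is a design choice in this sketch: the value is only ever matched exactly through a filter, so it does not need to take part in full-text search.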

For file uploads, the "user_id" must be attached to the file as metadata, using custom request headers when calling the blob storage endpoint. With a similar mapping in the blob storage indexer, the user_id field is also populated in the combined index for the records that come from files.
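
The upload request could be built roughly as follows. Azure Blob Storage accepts user-defined metadata as `x-ms-meta-<name>` headers; everything else in this sketch is an assumption: the URL is a placeholder, the `build_upload_request` helper is hypothetical, and authorisation (for example a SAS token or an Authorization header) is deliberately left out. The request is only constructed here, not sent.

```python
import urllib.request


def build_upload_request(blob_url: str, pdf_bytes: bytes, user_id: str) -> urllib.request.Request:
    """Build a PUT request that uploads a PDF and tags it with the owner's ID."""
    headers = {
        "x-ms-blob-type": "BlockBlob",      # upload as a single block blob
        "x-ms-meta-user_id": user_id,       # custom metadata read by the indexer
        "Content-Type": "application/pdf",
    }
    return urllib.request.Request(blob_url, data=pdf_bytes, headers=headers, method="PUT")


# Placeholder URL and content; authorisation is omitted in this sketch.
request = build_upload_request(
    "https://example.invalid/contracts/mortgage-contract.pdf",
    b"%PDF-1.7 ...",
    "cust-001",
)
print(request.get_method(), request.full_url)
print(request.header_items())
```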

Now that every record in the index carries the "user_id" field, we can use the Azure OpenAI SDK to query the data with a filter that requires user_id to equal the logged-in user's ID. Some details are explained in the Azure documentation.
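
A sketch of how the filter could be attached to the request is shown below. It builds an OData filter expression and places it in an Azure AI Search data source entry of a chat completion payload. The field names reflect my understanding of the Azure OpenAI "On Your Data" request format and should be verified against the current documentation; the helper names, endpoint, index name, and key are placeholders.

```python
import json


def user_filter(user_id: str) -> str:
    """OData filter restricting results to one customer; single quotes are doubled."""
    return "user_id eq '{}'".format(user_id.replace("'", "''"))


def build_chat_payload(question, user_id, search_endpoint, index_name, search_key):
    """Build the request body: the question plus a user-filtered search data source."""
    return {
        "messages": [{"role": "user", "content": question}],
        "data_sources": [{
            "type": "azure_search",
            "parameters": {
                "endpoint": search_endpoint,
                "index_name": index_name,
                "authentication": {"type": "api_key", "key": search_key},
                "filter": user_filter(user_id),  # only this customer's records
                "in_scope": True,                # answer only from retrieved data
            },
        }],
    }


# Placeholder values for illustration only.
payload = build_chat_payload(
    "What is the fixed-rate period of my mortgage?",
    "cust-001",
    "https://example-search.invalid",
    "customer-knowledge-index",
    "<search-api-key>",
)
print(json.dumps(payload, indent=2))
```

Escaping the single quote matters because the user ID ends up inside a filter expression; without it, an unexpected value could change the meaning of the filter.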

Copilot - Azure OpenAI Integration

When working with Copilot Studio, it may initially seem that the easiest way to integrate with the Azure OpenAI Service is to use the Generative Answers node. However, this approach makes it difficult to ensure that the chatbot's responses are limited to predefined data sources and do not draw on the LLM's general knowledge.

Another major obstacle was setting up content filtering so that the chatbot only uses data associated with the logged-in customer. The Generative Answers node does not offer straightforward options to achieve this user-based filtering.

Alternative Solution: Using the HTTP Request Node

To overcome these challenges, we opted to use the HTTP Request node instead. By calling our Azure Function directly through this node, we gained more control over the data filtering process. The Azure Function handles the specific calls and applies the necessary filters using the Azure OpenAI SDK.

This approach offers several advantages:

- The filtering logic is centralized within the Azure Function, making it easier to manage and maintain.

- The Azure OpenAI API key is securely stored and accessed by the backend Azure Function. This eliminates the need to expose the API key in the front-end channels, such as Copilot or a web application, enhancing security.
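
The shape of such a backend handler can be sketched as follows. This is a simplified outline under stated assumptions: the `handle_chat_request` function, the `AZURE_OPENAI_API_KEY` setting name, and the injected `ask_llm` callable are hypothetical, and the wiring into the Azure Functions runtime is omitted. The point is that the key is read on the server side and the user ID is applied in one place.

```python
import os


def handle_chat_request(body: dict, ask_llm, env=os.environ):
    """Validate the request from the chat flow and return (status, response_body)."""
    user_id = body.get("user_id")
    question = body.get("question")
    if not user_id or not question:
        return 400, {"error": "user_id and question are required"}

    # The key lives in the function's settings and never reaches the chat front end.
    api_key = env.get("AZURE_OPENAI_API_KEY")
    if not api_key:
        return 500, {"error": "backend is not configured"}

    # ask_llm is a placeholder for the filtered call to the Azure OpenAI Service.
    answer = ask_llm(question=question, user_id=user_id, api_key=api_key)
    return 200, {"answer": answer}


def fake_llm(question, user_id, api_key):
    """Stand-in used for this example; a real handler would call the LLM here."""
    return f"(answer for {user_id})"


print(handle_chat_request(
    {"user_id": "cust-001", "question": "When is my first payment due?"},
    fake_llm,
    env={"AZURE_OPENAI_API_KEY": "<secret>"},
))
```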

The Value of Microsoft Copilot

In this example, we use Microsoft Copilot and Copilot Studio as the chat platform because of their widespread adoption by enterprises. However, when Generative AI (Gen AI) and large language models (LLMs) form the backbone of the conversation, Microsoft Copilot's traditional no-code modeling approach becomes less relevant.

With Gen AI and LLMs, the model inherently recognizes context and understands user intent without the need for complex workflows involving predefined topics and intents. This capability reduces the reliance on Copilot Studio’s traditional no-code approach, which can be slower and more complex. However, Microsoft Copilot still provides significant value through its seamless integration with the Power Platform and specific workflows such as escalations, especially when integrated with Dynamics 365 Contact Center.

A Hybrid Approach for Enhanced Functionality

Given these considerations, we adopt a hybrid approach in this example. All conversations are forwarded to the LLM for processing and response generation. However, we initiate these interactions through Copilot, leveraging its valuable integrations and ecosystem. This approach allows us to benefit from the strengths of both Copilot’s platform integrations and the advanced conversational capabilities of LLMs.

Simplified Chat Options for Less Complex Use Cases

If your use case does not require complex integrations with other enterprise systems, you can opt for a simpler solution by utilising a standard chat widget available on the internet. These widgets can be easily embedded into your applications to provide basic conversational functionality without the overhead of integrating with Copilot’s ecosystem.

Conclusion

This article provided a high-level overview of how enterprises can enable their customers to ask questions about their specific documents and data, rather than relying on static, generic content. By leveraging a hybrid approach that integrates Microsoft Copilot with Gen AI and LLMs, organizations can enhance customer experience and offer personalized, context-aware interactions.

The solution integrates several Azure data and AI services, such as Azure AI Search, Azure OpenAI, Azure Cosmos DB, and Azure Blob Storage, with Microsoft Copilot.

In upcoming articles, we will explore the detailed configuration of each step in this architecture. This will include practical guidance on setting up the various components, especially security configuration between the different services and user authentication, with sample code on calling the various services.

Stay tuned.
